A new artificial intelligence (AI) model has justachieved human-level resultson a test designed to measure general intelligence.

It also scored well on a very difficult mathematics test.

Creating artificial general intelligence, or AGI, is the stated goal of all the major AI research labs.

© Photo by Michael M. Santiago/Getty Images

At first glance, OpenAI appears to have at least made a significant step towards this goal.

While scepticism remains, many AI researchers and developers feel something just changed.

For many, the prospect of AGI now seems more real, urgent and closer than anticipated.

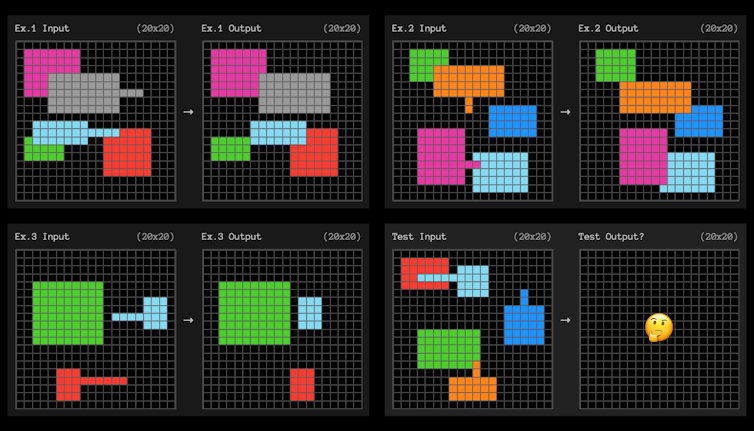

An example task from the ARC-AGI benchmark test.

An AI system like ChatGPT (GPT-4) is not very sample efficient.

The result is pretty good at common tasks.

It is bad at uncommon tasks, because it has less data (fewer samples) about those tasks.

It is widely considered a necessary, even fundamental, element of intelligence.

Each question gives three examples to learn from.

The AI system then needs to figure out the rules that generalise from the three examples to the fourth.

These are a lot like the IQ tests sometimes you might remember from school.

From just a few examples, it finds rules that can be generalised.

What do we mean by the weakest rules?

The technical definition is complicated, but weaker rules are usually ones that can bedescribed in simpler statements.

Searching chains of thought?

However, to succeed at the ARC-AGI tasks it must be finding them.

It would then choose the best according to some loosely defined rule, or heuristic.

you’re free to think of these chains of thought like programs that fit the examples.

There could be thousands of different seemingly equally valid programs generated.

That heuristic could be choose the weakest or choose the simplest.

However, if it is like AlphaGo then they simply had an AI create a heuristic.

This was the process for AlphaGo.

Google trained a model to rate different sequences of moves as better or worse than others.

What we still dont know

The question then is, is this really closer to AGI?

If that is how o3 works, then the underlying model might not be much better than previous models.

The concepts the model learns from language might not be any more suitable for generalisation than before.

The proof, as always, will be in the pudding.

Almost everything about o3 remains unknown.

We will require new benchmarks for AGI itself and serious consideration of how it ought to be governed.

If not, then this will still be an impressive result.

However, everyday life will remain much the same.

News from the future, delivered to your present.

Two banks say Amazon has paused negotiations on some international data centers.